Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog

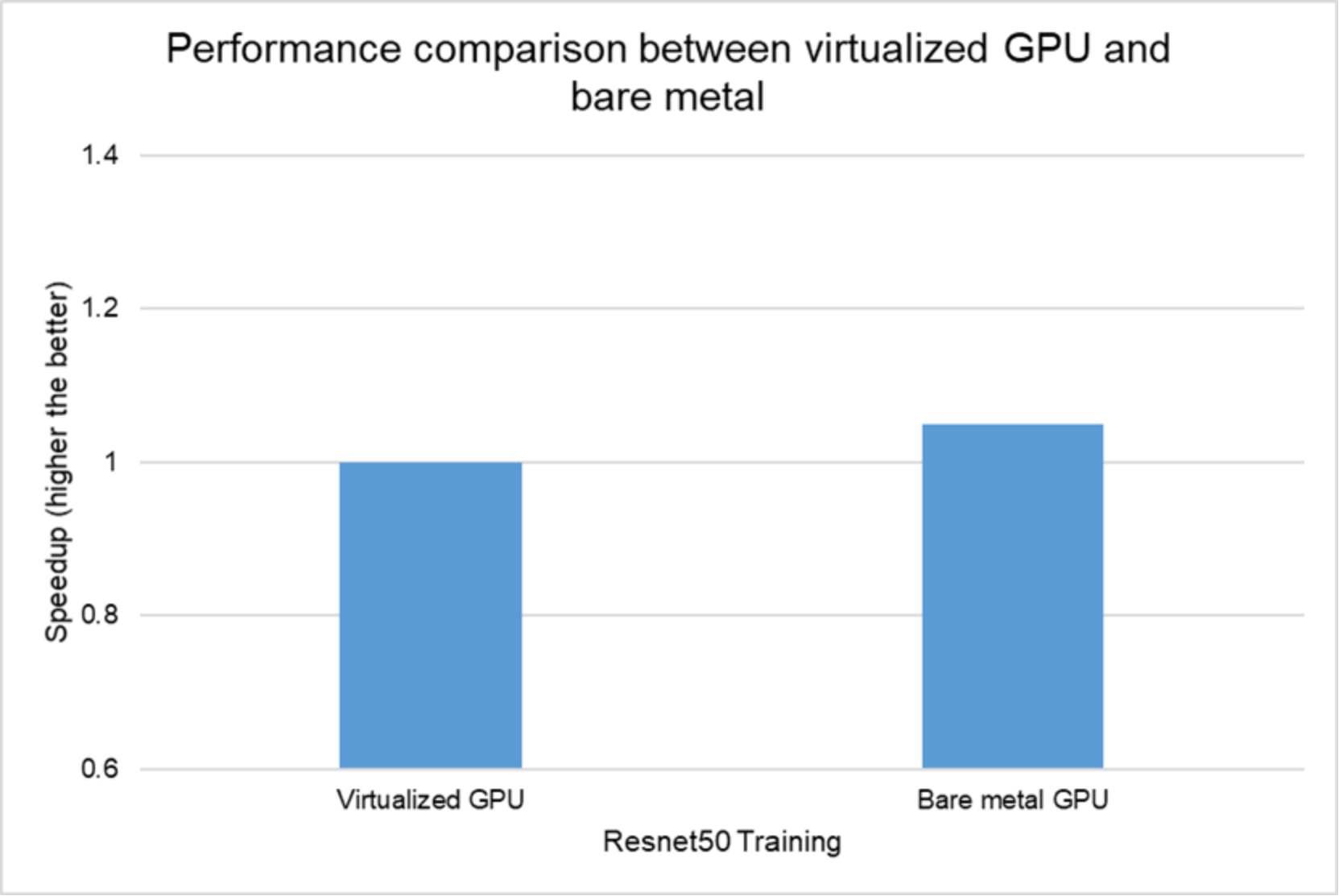

Performance results | Design Guide—Virtualizing GPUs for AI with VMware and NVIDIA Based on Dell Infrastructure | Dell Technologies Info Hub

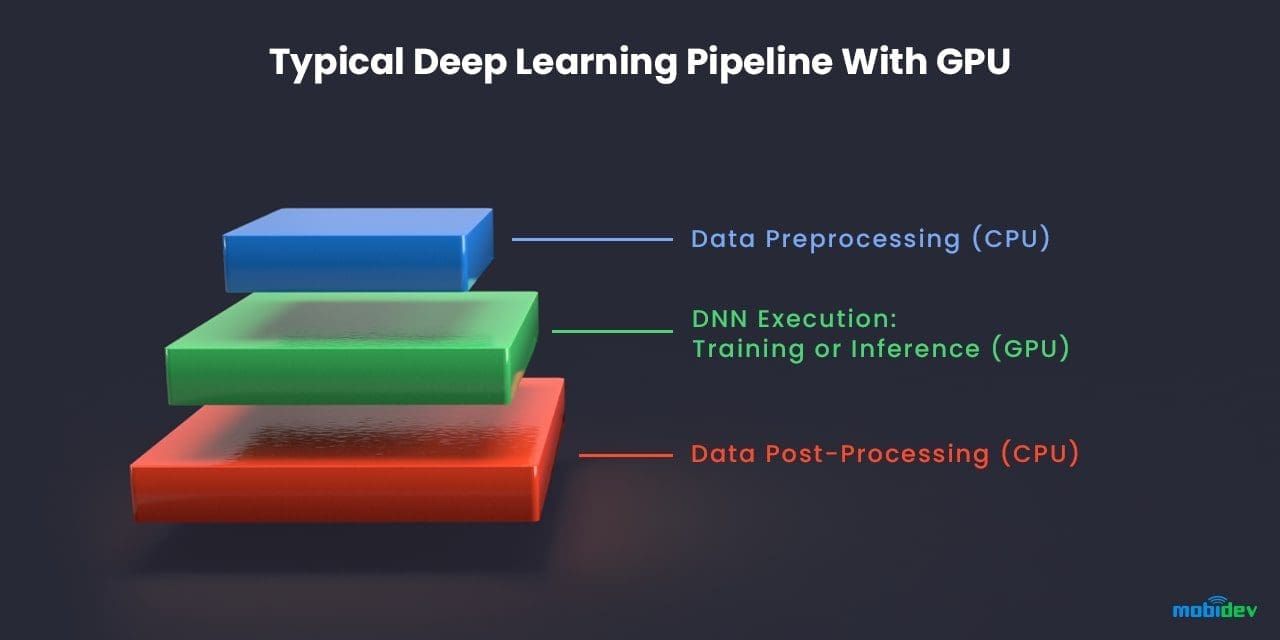

Accelerate computer vision training using GPU preprocessing with NVIDIA DALI on Amazon SageMaker | AWS Machine Learning Blog

Fast, Terabyte-Scale Recommender Training Made Easy with NVIDIA Merlin Distributed-Embeddings | NVIDIA Technical Blog

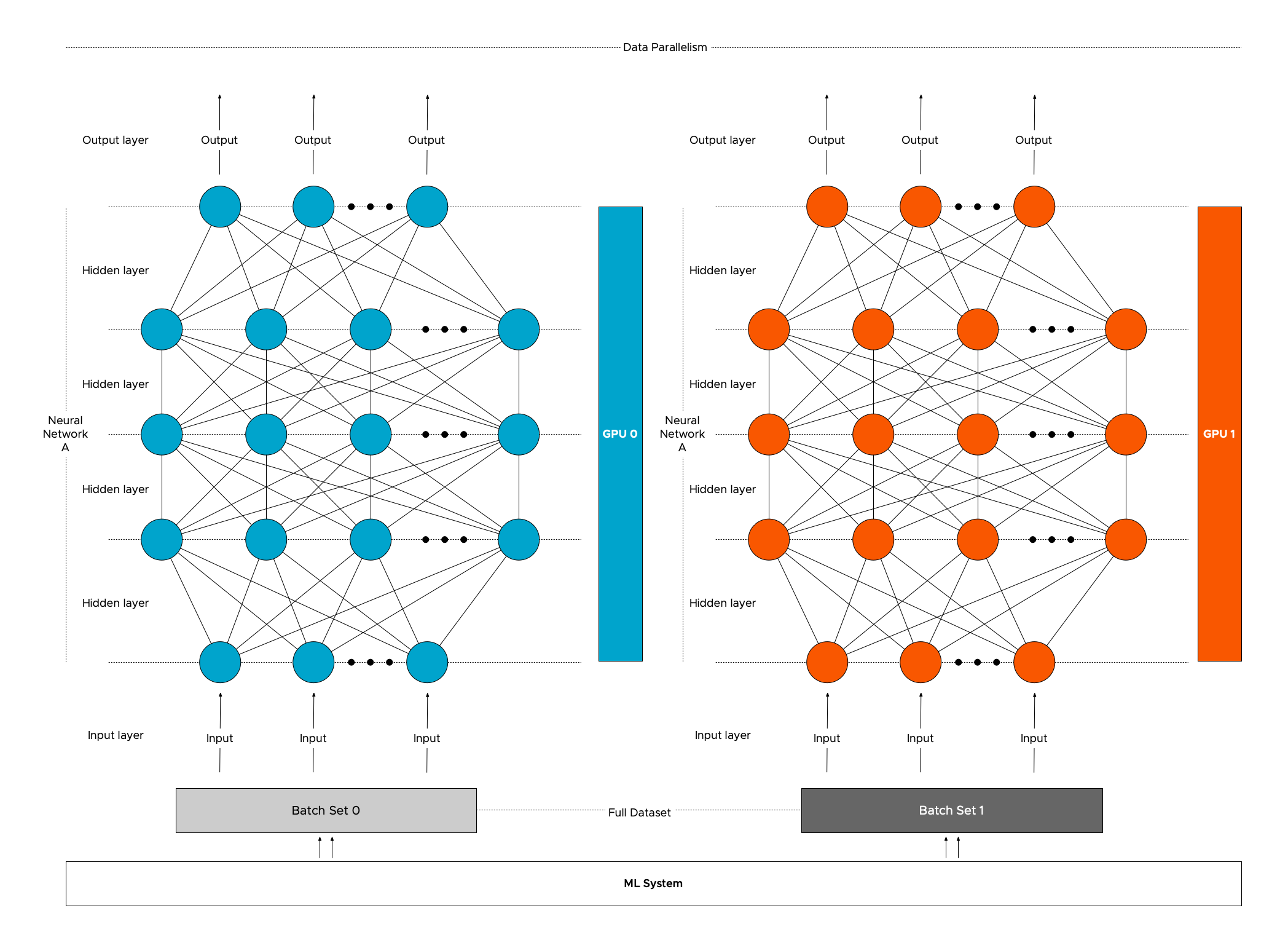

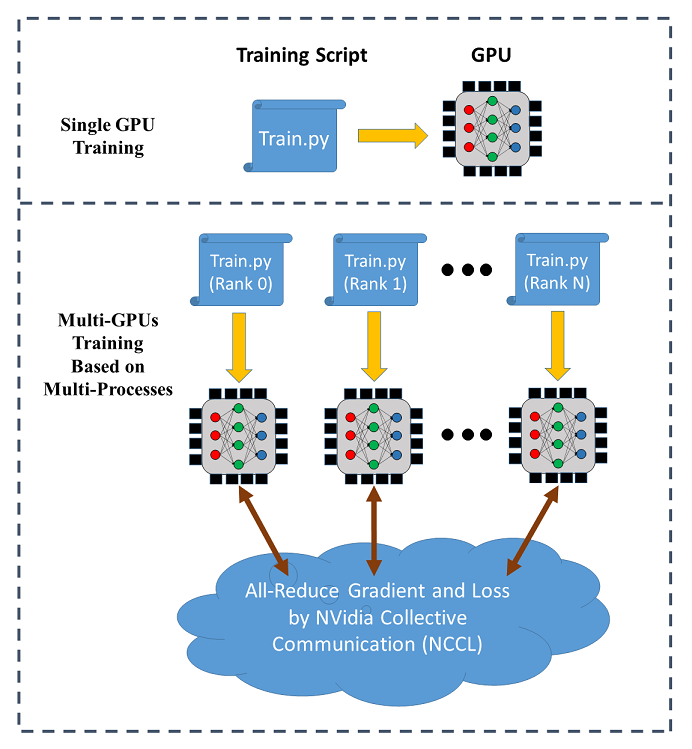

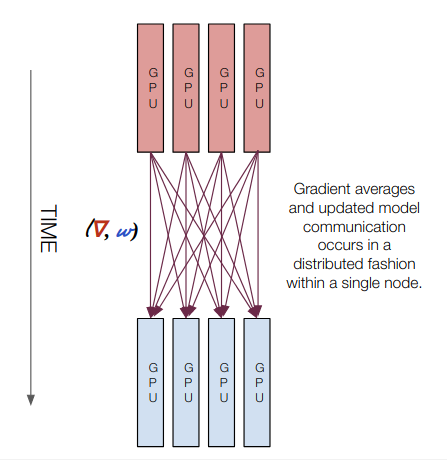

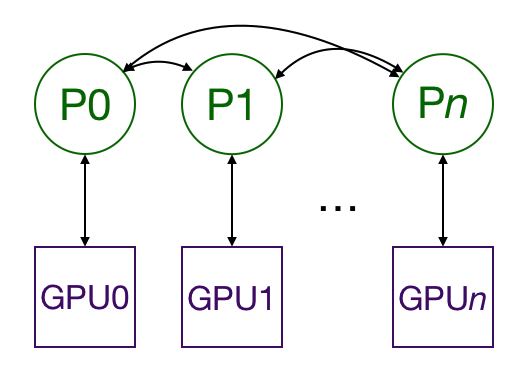

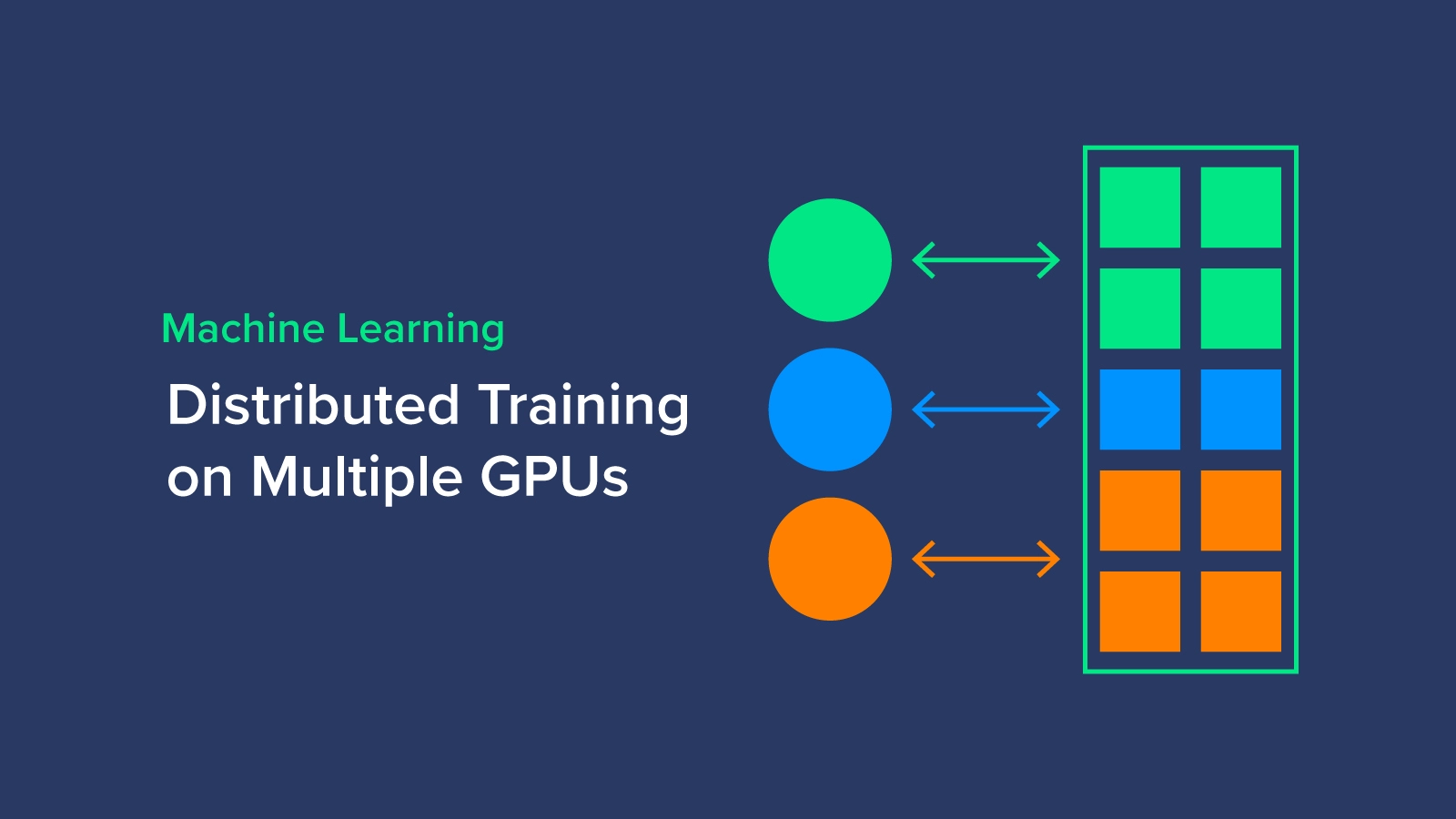

Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

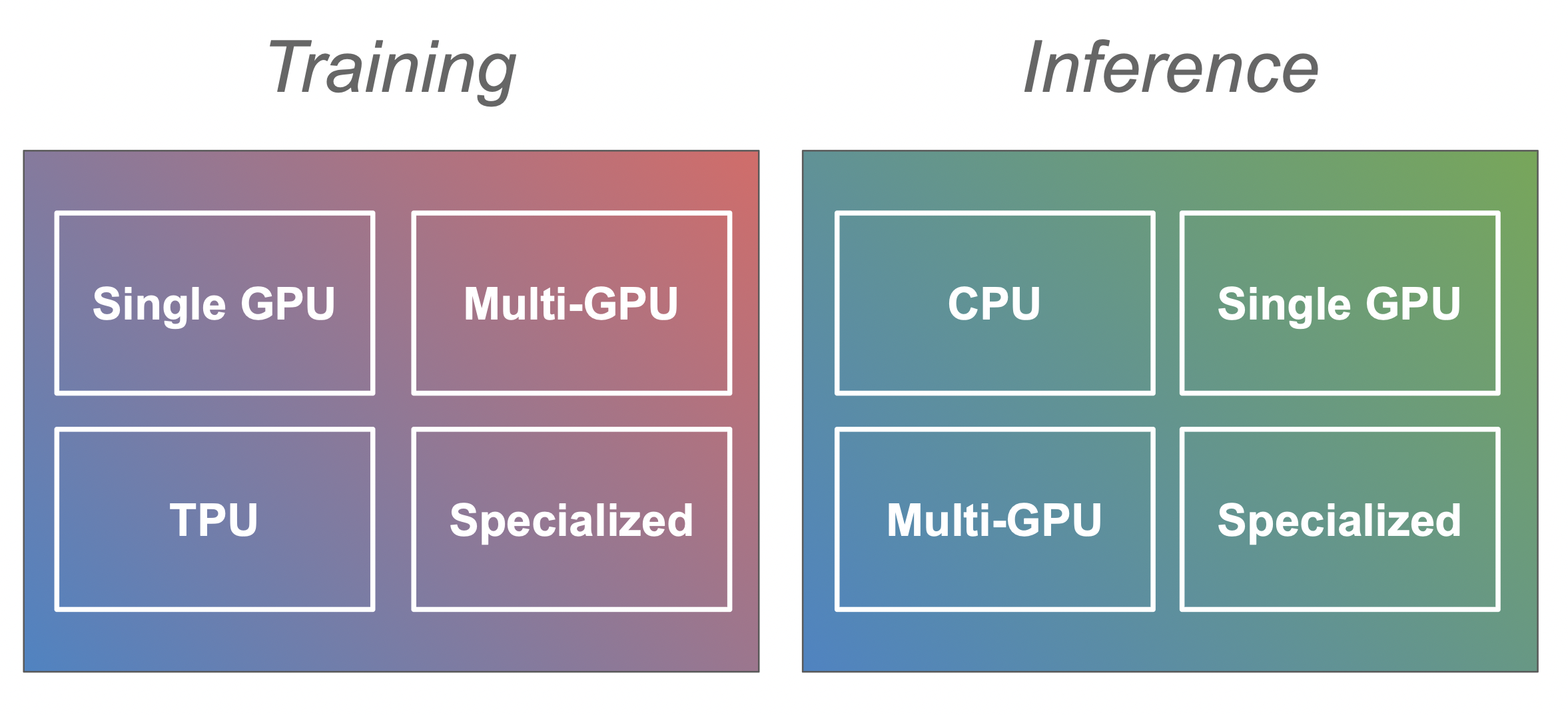

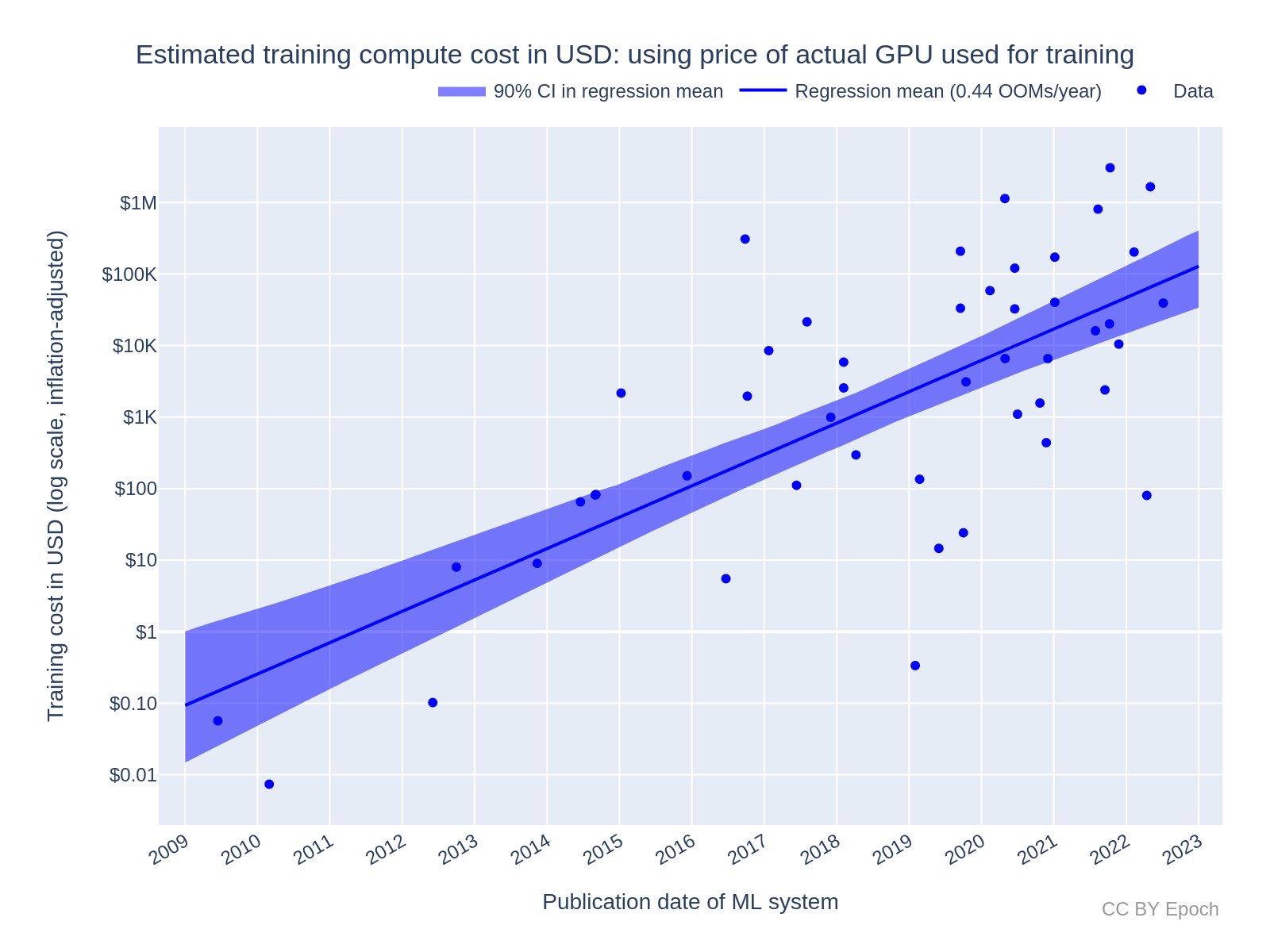

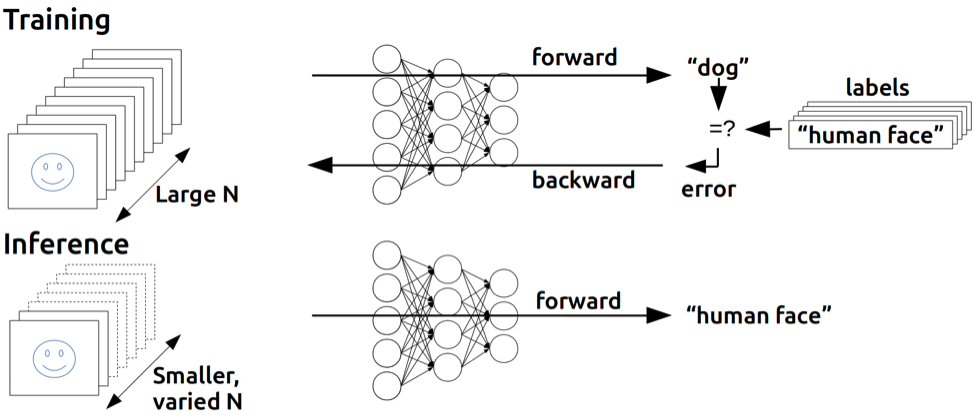

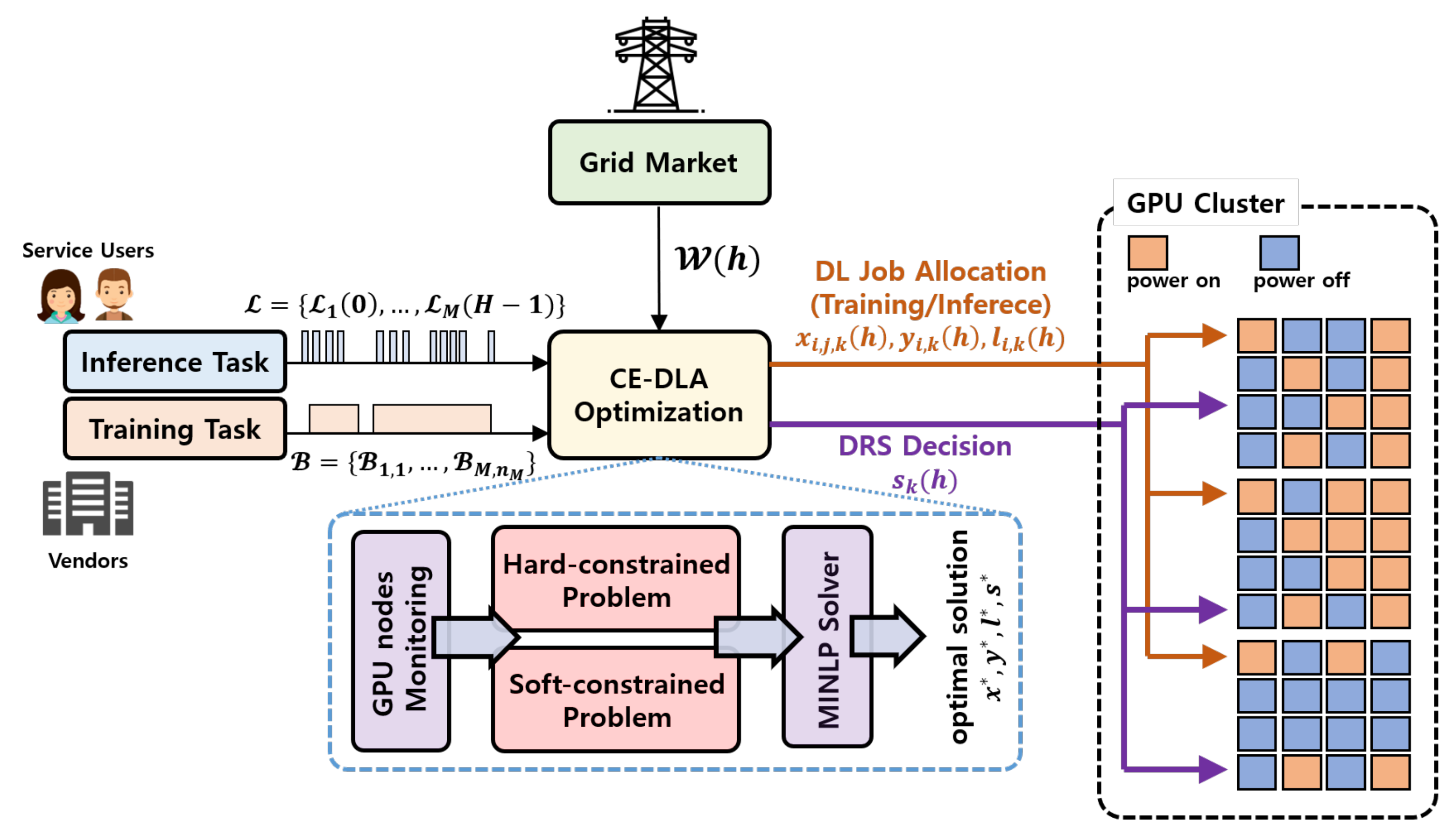

Energies | Cost Efficient GPU Cluster Management for Training and Inference of Deep Learning